The Nobel Committee 2024 Puts the Spotlight on AI and Machine Learning

The buzz and attention on artificial intelligence and machine learning are getting serious, and we can add this year's Nobel committee to the group chat. This week, the Nobel prizes for chemistry and physics both featured work on the development and application of artificial intelligence. While the choice of awardees (and their respective projects) may seem like a trendy one, it's also a serious one highlighting AI's impact on society.

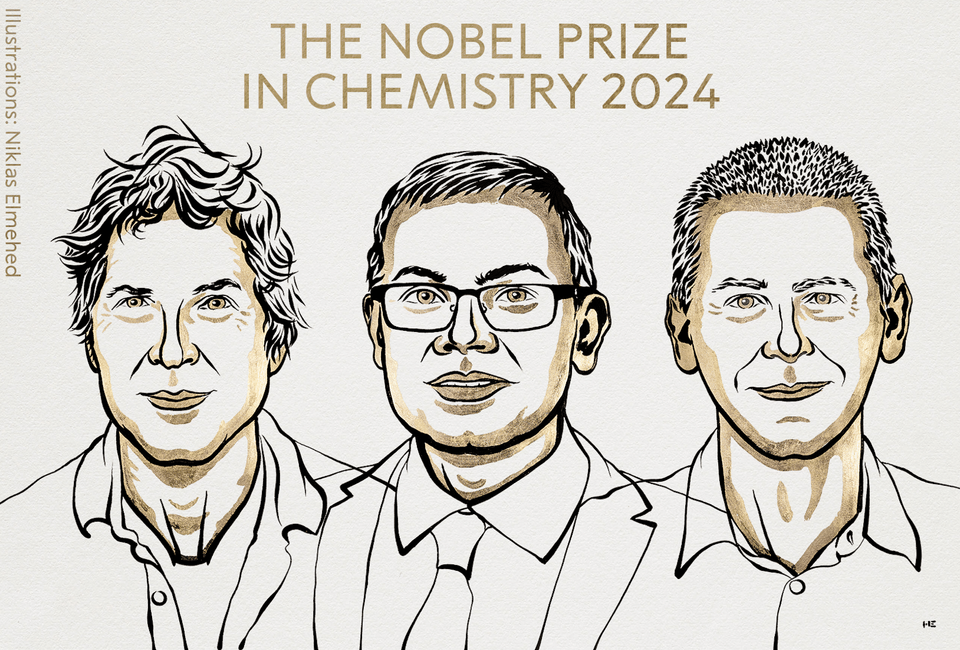

This year's Nobel committee has 'entered the AI chat' by recognizing scientists who have invested significant work and effort into machine learning and artificial intelligence. Even more surprising, the technology is represented in two Nobel Prizes: Chemistry and Physics. The Nobel Prize for chemistry was awarded to three scientists: John M. Jumper and Demis Hassabis of Google's DeepMind, and David Baker, a US biochemist. They used AI to 'crack the code' of almost all known proteins.

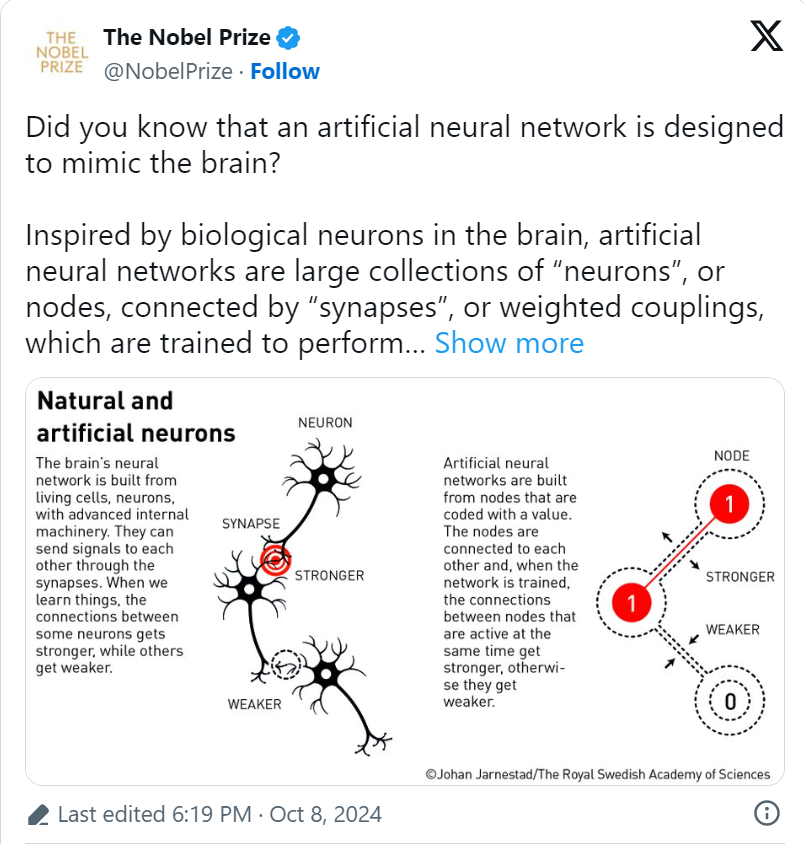

Then, for physics, the committee awarded the Nobel Prize to Geoffrey Hinton and John Hopfield, pioneers in artificial intelligence. They are credited with creating the building blocks of machine learning, which is now reshaping how we work, communicate, design, deliver treatments, and even enjoy entertainment. These two Nobel Prize awards highlight the growing importance of AI and machine learning to different industries.

However, the attention on the technology brings forth a few concerns, including the warning of one of this year's awardees that it also carries significant risks to humanity.

Nobel Prize for Chemistry

This year's Nobel Prize for chemistry was awarded to three scientists who used AI to "crack the code" of almost all known proteins. David Baker, a US biochemist, was recognized for building new proteins. John Jumper and Demis Hassabis, who work at Google DeepMind, were recognized for their AI model, which is used to predict proteins' complex structures.

Proteins are the building blocks of life. They help form skin and tissue cells, read, copy, and repair DNA, and carry oxygen in the blood. While proteins are built from just 20 amino acids, they combine in endless ways, transforming into highly complex patterns in three-dimensional space.

Hassabis and Jumper were honored by this year's Nobel committee for using AI to predict a protein's three-dimensional structure from its amino acid sequence, making it possible to predict the structure of almost all 200 million known proteins.

The pair's AI model is called AlphaFold. Its predictions are published in the AlphaFold Protein Structure Database, which now acts as the "Google search" for protein structures, helping researchers in various medical fields. The model and the database were made public, allowing researchers to use them for many different applications.

Nobel Prize for Physics

Geoffrey Hinton and John Hopfield, two pioneers in artificial intelligence, shared the Nobel Prize in Physics. Hinton has Canadian and British citizenship and works at the University of Toronto, while Hopfield works at Princeton University.

According to committee member Mark Pearce, the two scientists are true pioneers in artificial intelligence who did the fundamental work that led to the development and popularity of machine learning. The two scientists worked on artificial neural networks, which are used primarily in science and medicine but also have plenty of use cases for the general public, including facial recognition and language translation.

Hinton received the news while staying at a hotel. Speaking by phone with the Nobel committee, he said he was "flabbergasted" by the honor. He also said he's "worried that the overall consequence of this might be systems more intelligent than us that eventually take control."

Hinton quit Google in 2023, citing concerns over the flood of misinformation, the possibility of AI affecting the job market, and the 'existential risk' posed by the development of true intelligence. He and two students at the University of Toronto built a neural net in 2012. He joined Google in 2013 to help develop the company's AI technology, and his work paved the way for the development of today's popular tools, such as ChatGPT.

Concerns over AI continue to grow

The Nobel Prizes for Physics and Chemistry highlight the growing importance of machine learning and generative AI. Many commentators and editorials suggest that the choice of AI is trendy and fit for the times. While machine learning and generative AI are today's favored technologies, we can't deny their value and influence in helping several industries. For example, companies rely on these technologies to improve business decisions and to automate services in ways meant to improve customer satisfaction.

However, AI's increased popularity and growing list of use cases also highlight a few risks. This week, during the announcement of the Nobel Prize winners, talk of AI's potential risks and challenges took center stage.

Hinton was among the first to both appreciate AI's contribution and admit its potential flaws and risks to humanity. In an interview right after the Nobel committee's announcement, Hinton shared that AI's impact could be comparable with the Industrial Revolution. The difference, he said, is that instead of exceeding people in physical strength, the technology will exceed them in intellectual ability.

While Hinton is excited about the possibilities of AI, he also shares his worries, especially about the technology getting out of control. In a recent CNN interview, Hinton said that an AI with superhuman intelligence will eventually "figure out how" to manipulate humans into doing its bidding.

In a BBC interview last year, Hinton called the dangers associated with AI quite scary and warned that AI systems could become more intelligent than humans and could be exploited by bad actors.

This isn't the first time that Hinton has warned against AI. Last year, he quit Google so that he could speak freely about the risks of AI.

Even the Nobel Prize committee worries about the possible adverse effects of machine learning and generative AI. According to one committee member, AI offers "enormous benefits," but its fast growth poses some threats. The member called on humans to use this new technology safely and ethically so that it ultimately benefits humanity.

Concerns over generative AI are slowly becoming a reality

One of Hinton's short-term warnings is that people may find it difficult to tell what is real from what is fake because of AI-generated content. These concerns have become a reality in recent months, as we have started seeing fake content dupe online users.

For example, an image of Pope Francis in a Balenciaga puffer coat went viral in 2023. However, it was just a photo-realistic image of the pope created with Midjourney, a popular image generator. Then there were the AI-generated photos of Donald Trump that made headlines, and even Taylor Swift was the subject of AI-generated images that went viral.

What's next for machine learning and generative AI?

Now that the Nobel Prizes have been awarded with AI as one of the biggest winners, what can we expect next? One thing is sure: the recognition awarded to AI pioneers elevates the technology to new scientific heights. The technology, once the exclusive domain of theorists and academics in universities and engineers in Silicon Valley, is now accessible to the general public. At least some parts of it are no longer a complex, theoretical concept, since we now encounter plenty of use cases daily.

Among AI's use cases, we can most clearly feel its benefits in generative AI, particularly with chatbots and image generators. And as we all know now, this technology is redefining work, communication, and our way of life. Now that machine learning and generative AI have received the formal recognition they deserve, it is time to move to the next phase. The next steps must focus on making AI safe and reliable and on promoting human values.

One of Hinton's concerns is that AI can be manipulated by bad actors or, worse, can become more intelligent than humans and ultimately control its developers to achieve its own aims. As such, what the industry (and the general public) needs now are rules and regulations that promote AI's responsible and ethical use.

It's a turning point for AI and the industry: AI has proved its value and its use cases, and it's time to address the potential risks. That becomes possible when everyone participates in making machine learning and generative AI a tool for humanity.