Meta Announces New Rules on Deep Fakes and Altered Media

Deep fakes, a portmanteau of "deep learning" and "fake," first appeared in November 2017, when an anonymous Reddit user shared a tool that used existing artificial intelligence algorithms to generate fake videos. Other users soon shared the code online, making it publicly available and easier for anyone to build on. While the technology has benefits, it also carries dangers that are pushing some organizations to act.

Deep fakes have grown in popularity in recent years, and for most people they're fun to use and highly entertaining. Some have gone viral, too, including a few released in 2023 that impressed and amused the general public. If you're a regular social media user, you have probably encountered the "Balenciaga Pope," an image of Pope Francis wearing a huge white puffer jacket that went viral in March 2023. The internet was delighted to see the pope upgrading his sartorial options. However, the appreciation was short-lived once many learned that the image had been created by a 31-year-old worker using Midjourney.

Then, in April 2023, fans of The Weeknd and Drake got an exciting treat: a fiery collab called "Heart on My Sleeve." Except that the collab never happened, since the music was AI-generated. Yes, it was catchy, but the song also went viral because it fed ongoing concerns about the threat generative AI poses to the music industry. Deep fakes and AI-generated images can be political, too. US presidents Donald Trump and Barack Obama have both been the subject of fake images used in disinformation campaigns, raising concerns about how the technology is used. The growing clamor for regulation has led Meta, formerly Facebook, Inc., to update its policies on handling AI-generated content and manipulated media.

Meta faces growing concerns about altered media

Meta's latest announcement is an instructive example of current efforts to address the dangers of deep fakes, AI-generated images, and fake news. Facebook, Meta's popular social networking site, is often seen as the worst offender in spreading fake news. One study by a team from Princeton University found that Facebook was the referrer site for untrustworthy news sources over 15% of the time, more than any other site studied. Meta's latest announcement is therefore a response to the growing problem of deep fakes and altered media on its platforms.

Key takeaways from Meta's latest policy change

In a blog post published on April 5, 2024, and written by Monika Bickert, Meta's Vice President for Content Policy, the company recognized the growing problem and proposed several policy changes:

- Meta plans to change how its platforms handle manipulated media, based on feedback from the Oversight Board, expert consultations, and the results of public opinion surveys;

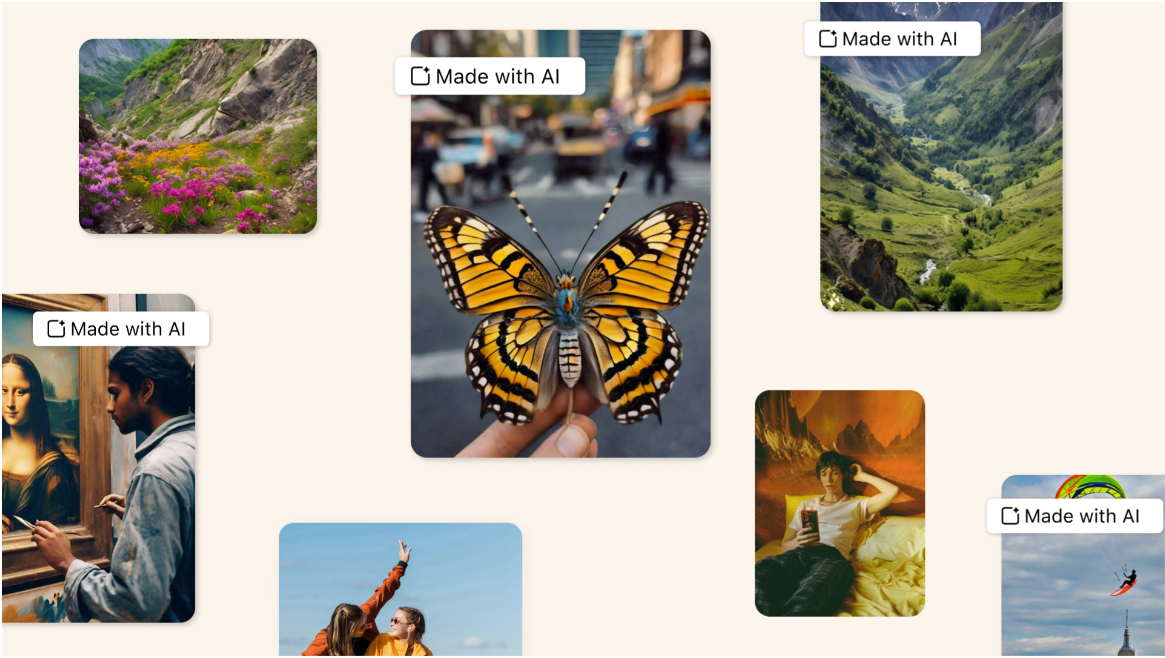

- The company plans to start labeling a wider range of video, audio, and image content as "Made with AI" when its team detects industry-standard AI image indicators or when users disclose that their uploads are AI-generated;

- Meta agrees with the Oversight Board's recommendation to address manipulated media by adding labels and context, which provides transparency without restricting freedom of speech.

In the blog post, the company admits that its existing policy on this type of content is inadequate, arguing that it was drafted when AI technology was in its infancy. Ms. Bickert writes that their "manipulated media policy was written in 2020 when realistic AI-generated content was rare and the overarching concern was videos." She adds that in the four years since, other kinds of realistic AI-generated content, such as photos and audio, have emerged, and that the technology is evolving quickly.

The company also explains that it will not simply remove manipulated media that doesn't otherwise violate its "Community Standards," because doing so would unnecessarily risk restricting freedom of expression. Faced with concerns about the effects of altered media, Meta has decided to adopt a "less restrictive" approach by adding labels with context. A label will be applied when Meta detects industry-shared signals of AI images or when users disclose that their uploads are AI-generated. Meta has announced that it will start labeling content in May 2024.

Deep fakes and AI-generated content

Deepfakes are fake or forged videos or images generated using deep learning, a form of artificial intelligence. With this technology, a person's likeness, such as their voice and face, can be realistically swapped with someone else's. It's our generation's version of photoshopping, but better, more realistic, and potentially dangerous.

The technology first appeared online in November 2017, when an anonymous Reddit user shared a tool that harnessed existing artificial intelligence algorithms to generate fake videos. Other Reddit users then shared the code on GitHub, making it more popular and opening it up to various applications.

Deepfakes aren't just AI-generated videos. The technology can also be used to create fictional photos and to generate audio, or even a whole song, as with the supposed The Weeknd and Drake collab. Recently, there have been reports of fake LinkedIn profiles, complete with pictures and personal information, that are thought to have been used for spying operations. Fraudsters also use the technology to fake audio recordings or create voice clones. In one case, the UK subsidiary of a German energy firm paid nearly £200k into a Hungarian bank account after being phoned by a fraudster who mimicked the German CEO's voice. The company's insurers say the voice was a deep fake, but the evidence is unclear.

While deep fakes may not necessarily cause international disasters and conflicts, they can still create mischief and spread disinformation. However, many experts and insiders say these aren't the real costs of deepfakes and AI-generated images. The real problem lies in the creation of a "zero-trust society," where people cannot, or no longer bother to, distinguish truth from falsehood. Once trust erodes, it becomes easier to cast doubt on specific events, actions, and persons.

Addressing deep fakes and AI-generated content

Governments, businesses, and organizations now recognize both the growing benefits and the possible costs of generative AI technology. To address its dangers, several organizations have been pushing for programs and legislation to deter the production of deepfakes, especially those that misappropriate identities for pornographic purposes. Such proposed legislation aims to create criminal liability for those who generate and distribute deepfakes for this purpose.

Many websites are also taking notice and doing their share to stop the proliferation of deepfakes and to fact-check information shared online. Meta owns and operates the biggest social media platform, so its decision to start adding context and labels to AI-generated content is a step in the right direction in fighting the dangers posed by deepfakes.

While Meta's policy consists of steps it considers appropriate for its own platforms, the company says it still values the input of industry peers and other stakeholders. As such, Meta has also announced that it will continue collaborating with its peers and joining dialogues with civil society and governments, and promises to "review our approach as technology progresses."