California's AI Safety Bill: Here's What We Know So Far

Amid the excitement and promise of Artificial Intelligence (AI) and generative AI, there are potential risks. In response to growing safety concerns over the use of AI, a few developers have been integrating guardrails into their projects to ensure that their generative AI technology is built on trust. Governments are also moving to make generative AI safer, or at least to introduce accountability into the growing industry. California is the first state to introduce an AI Safety Bill, which aims to regulate the technology.

AI and generative AI have been a major storyline over the last two years. This popular technology has captured the imagination of the general public and thrilled tech companies. According to multiple studies and surveys, interest and investment in AI have increased dramatically in the last decade. For example, Stanford University estimates that total assets and acquisitions related to AI amounted to $934.2 billion from 2013 to 2022. Stanford added that investment hit $276.1 billion in 2021. By 2025, global AI investment is expected to exceed $200 billion, with OpenAI (ChatGPT's developer) as the primary spender. The excitement and support for this technology are understandable.

AI is one of the fastest-growing technologies right now, and it is disrupting workflows and traditional businesses. With ChatGPT and generative AI leading the charge, AI is powering digital assistants, automating image and video creation, and making manufacturing processes more efficient. But the dramatic burst of AI and generative AI onto the scene comes at a cost.

Many experts, users, and observers are grappling with the potential costs of using AI. This has led many developers to adopt guardrails as guidelines, so that their projects build generative capabilities on a foundation of reliability and trust.

But the developers' guardrails do not seem to be enough to calm the nerves of some experts, observers, and stakeholders, particularly in government. Now, the government is looking at more formal regulation to ensure that generative AI becomes safer for its users.

Late last year, it was reported that a California lawmaker would file a bill to make generative AI models 'more transparent' and start the conversation on their regulation. Senator Scott Wiener (D) drafted a bill that aims to require large language models to meet specific transparency standards. In addition, it was reported that the bill includes proposed security measures to keep AI out of the reach of 'foreign states', and adds a line recommending the establishment of an AI research center outside of 'Big Tech'.

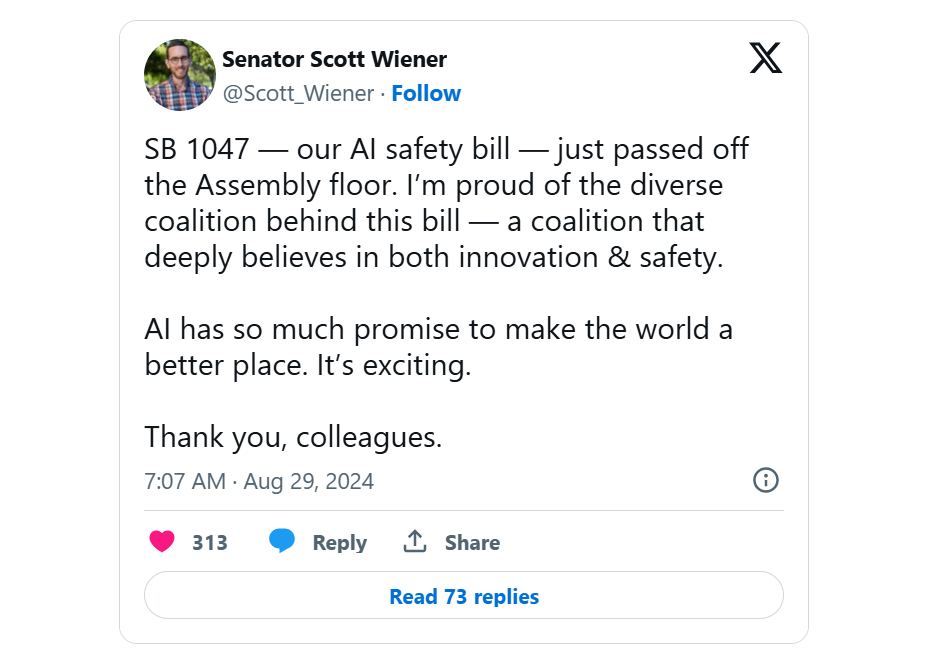

Update on AI Safety Bill: California's legislature passes sweeping AI safety bill

It's official: the state's legislature has passed the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, or SB 1047. The bill is now on Governor Newsom's desk, ready to be signed into law at any time. Senator Wiener shared the news on X, citing a 'diverse coalition' behind the bill and saying that, once signed into law, it can "make the world a better place".

SB 1047 faced a few critics, and some surprising supporters

Critics of the bill include OpenAI and Anthropic, politicians Nancy Pelosi and Zoe Lofgren, and the state's Chamber of Commerce. According to this group, the bill focuses too much on 'catastrophic harm', which can ultimately affect small players, including open-source AI developers. OpenAI, one of the biggest players in the AI industry, has even filed a letter to express its concerns over the bill.

In a letter dated August 21st, 2024, Jason Kwon, OpenAI's chief strategy officer, argued that regulation of the technology should be "left to the federal government". He added that this AI safety bill could slow down the progress of the technology and may cause tech companies to leave the state.

Mr. Kwon stated that a "federally-driven set of AI policies, rather than a patchwork of state laws, will foster innovation and position the U.S. to lead the development of global standards." As such, the company joins other AI labs, experts, and politicians in "respectfully opposing SB 1047". The letter was addressed to the bill's proponent, Senator Wiener.

The Office of Senator Wiener and the bill's other proponents immediately offered justification for the bill. According to the proponents, the bill aims to set standards in preparation for the development of more powerful AI models. It also requires precautions, such as the adoption of safety tests and other guardrails, and provides 'whistleblower protection' for employees in case legal action is taken against developers and corporations.

Finally, they note that the bill calls for the establishment of a 'public cloud computer cluster' called CalCompute. In response to OpenAI's letter, Wiener clarified that the requirements apply to any company operating in the state. The senator added that OpenAI "doesn't criticize a single provision of the bill", and further explained that it is a reasonable bill that only asks companies and developers to do what they have already committed to doing.

After amendment, SB 1047 gets wider support

The bill was amended in response to the growing concerns and complaints from some stakeholders. Before the vote, the proponents replaced potential criminal penalties with civil ones, limited the enforcement powers of the state's attorney general, and modified the requirements to join the "Board of Frontier Models" as indicated in the bill.

Aside from OpenAI, Anthropic is another major player in the growing AI and generative AI industry. Anthropic CEO Dario Amodei also sent a letter to the office of Governor Newsom. In this letter, Amodei mentioned that the bill has "substantially improved", saying that its benefits now outweigh its costs following the amendments. OpenAI declined to comment on the amendments and instead pointed to the company's original letter sent to the office of Senator Wiener.

Although there were plenty of critics, the bill also drew a few surprising supporters. Elon Musk, the tech billionaire who controls X and Grok, was one of the first to raise concerns over the dangers of AI. In a post on X, Mr. Musk added his thoughts to the growing conversation on AI risk and safety by sharing that the state should probably pass the AI Safety Bill. Musk added, "For over 20 years, I have been an advocate for AI regulation", explaining that regulation should be standard practice for any technology or product with potential risk.